THE AUDITORY MODELING TOOLBOX


demo_may2011

Demo of the model estimating the azimuth angles of concurrent speakers

Description:

demo_may2011 demonstrates how the model estimates the azimuth angles of three concurrent speakers. It also returns the estimated angles.

Set the variable demo to one of the following flags to show other conditions:

- 1R: One speaker in a reverberant room.

- 2: Two speakers in free field.

- 3: Three speakers in free field. This is the default.

- 5: Five speakers in free field.

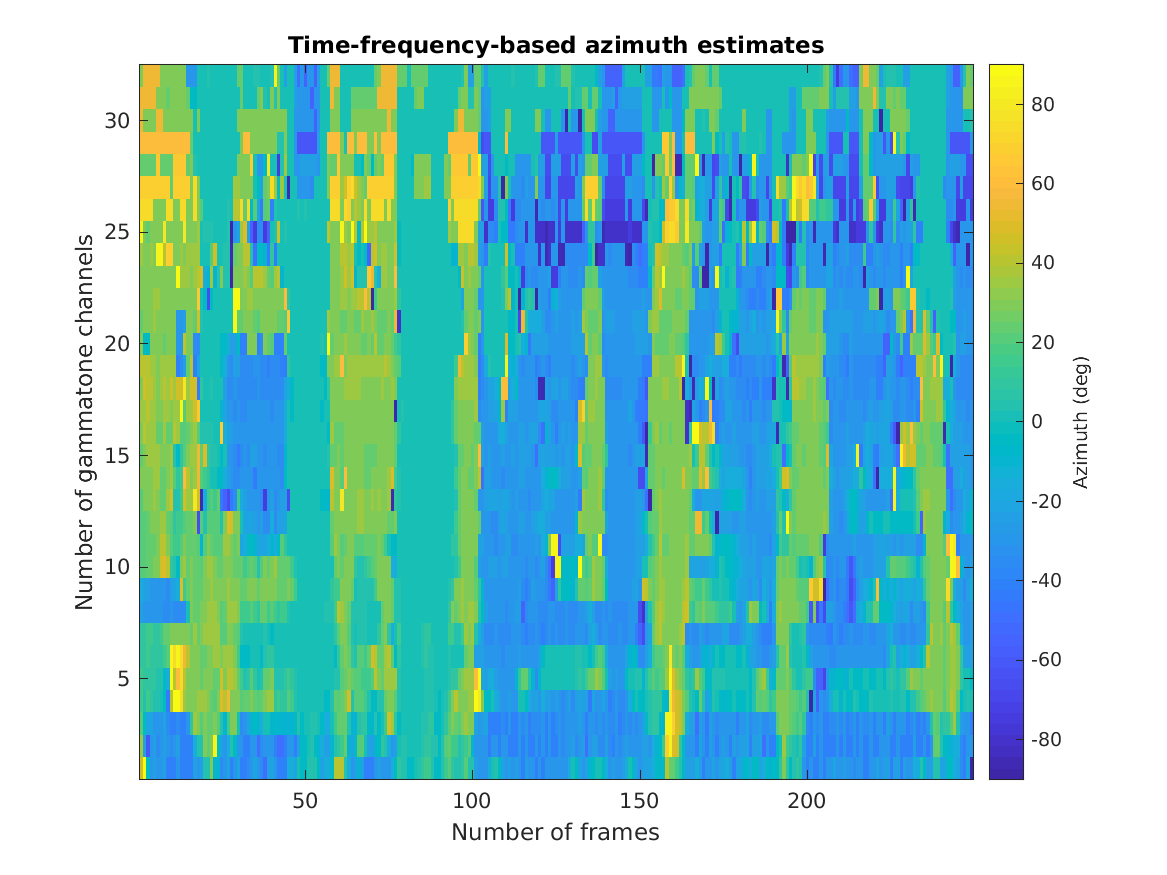

Time-frequency-based azimuth estimates

This figure shows the azimuth estimates in the time-frequency domain for three speakers.
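To show what a time-frequency azimuth map contains, the minimal Python sketch below assigns each time-frequency unit the candidate azimuth with the highest log-likelihood. It is not the toolbox's implementation. The function name tf_azimuth_map and the (azimuths, channels, frames) layout of the log-likelihood array are hypothetical choices made for illustration.

```python
import numpy as np


def tf_azimuth_map(log_lik, azimuths):
    """Pick the most likely azimuth for every time-frequency unit.

    log_lik:  hypothetical array of shape (n_azimuths, n_channels, n_frames)
              holding the log-likelihood of each candidate azimuth per unit.
    azimuths: 1-D array of candidate azimuths in degrees.
    Returns an (n_channels, n_frames) array of azimuths in degrees.
    """
    # Index of the winning azimuth in each time-frequency unit
    best = np.argmax(log_lik, axis=0)
    # Map indices back to angles
    return np.asarray(azimuths)[best]
```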

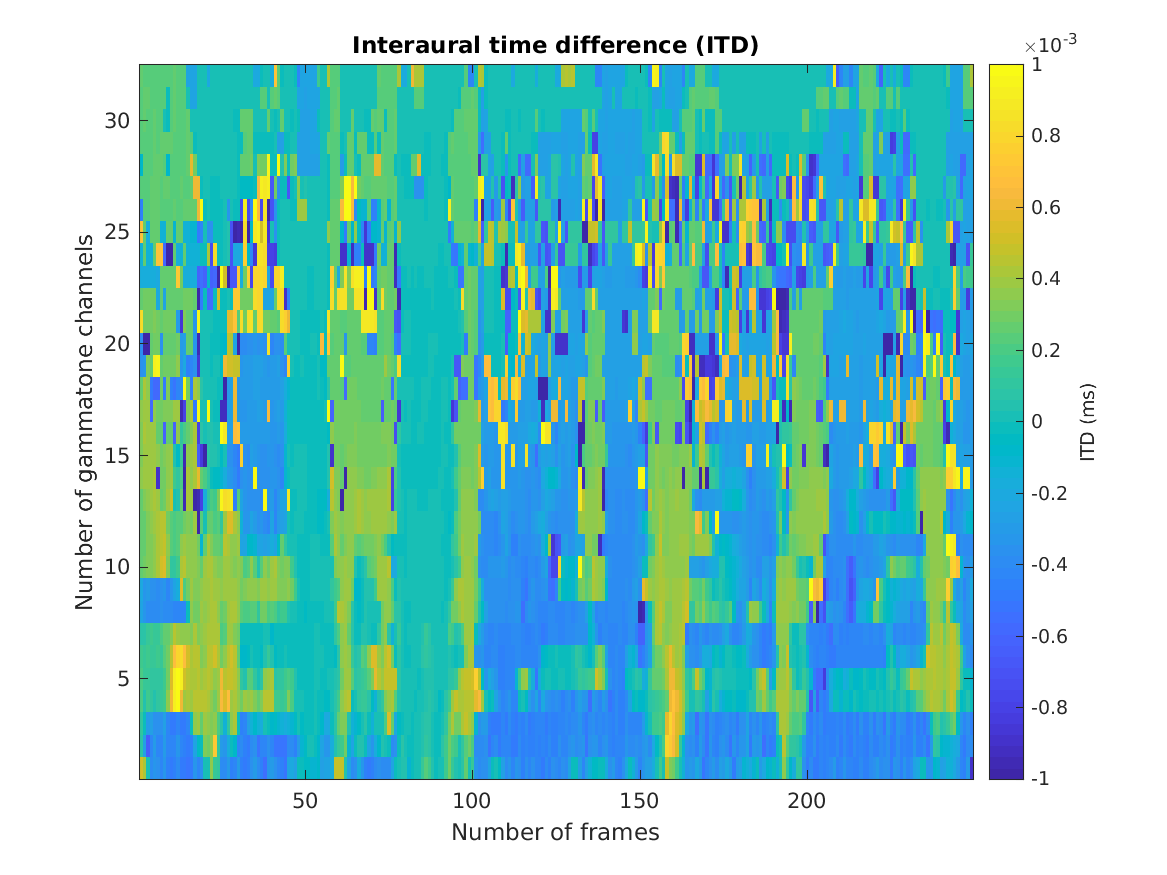

Interaural time differences (ITDs)

This figure shows the ITDs in the time-frequency domain estimated from the mixed signal of three concurrent speakers.
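ITDs like these are commonly estimated per frequency channel and frame as the lag of the maximum of the interaural cross-correlation. The sketch below shows that idea for a single frame. Several details are assumptions, and the toolbox's binaural front-end may differ from them:

- the function name estimate_itd,
- the 1 ms search range,
- the sign convention (positive when the left ear leads).

```python
import numpy as np


def estimate_itd(left, right, fs, max_itd=1e-3):
    """Estimate the ITD (seconds) of one frame from the cross-correlation peak.

    Positive values mean the left channel leads (the right one is delayed).
    Only lags within +/- max_itd seconds are searched.
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    n = len(left)
    max_lag = int(round(max_itd * fs))
    # Entry k + n - 1 of the 'full' output equals sum_m right[m + k] * left[m]
    xcorr = np.correlate(right, left, mode="full")
    lags = np.arange(-(n - 1), n)
    # Restrict the search to plausible interaural delays
    window = np.abs(lags) <= max_lag
    best_lag = lags[window][np.argmax(xcorr[window])]
    return best_lag / fs
```

With this convention, a right-ear signal delayed by 5 samples at 16 kHz yields an ITD of 0.3125 ms.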

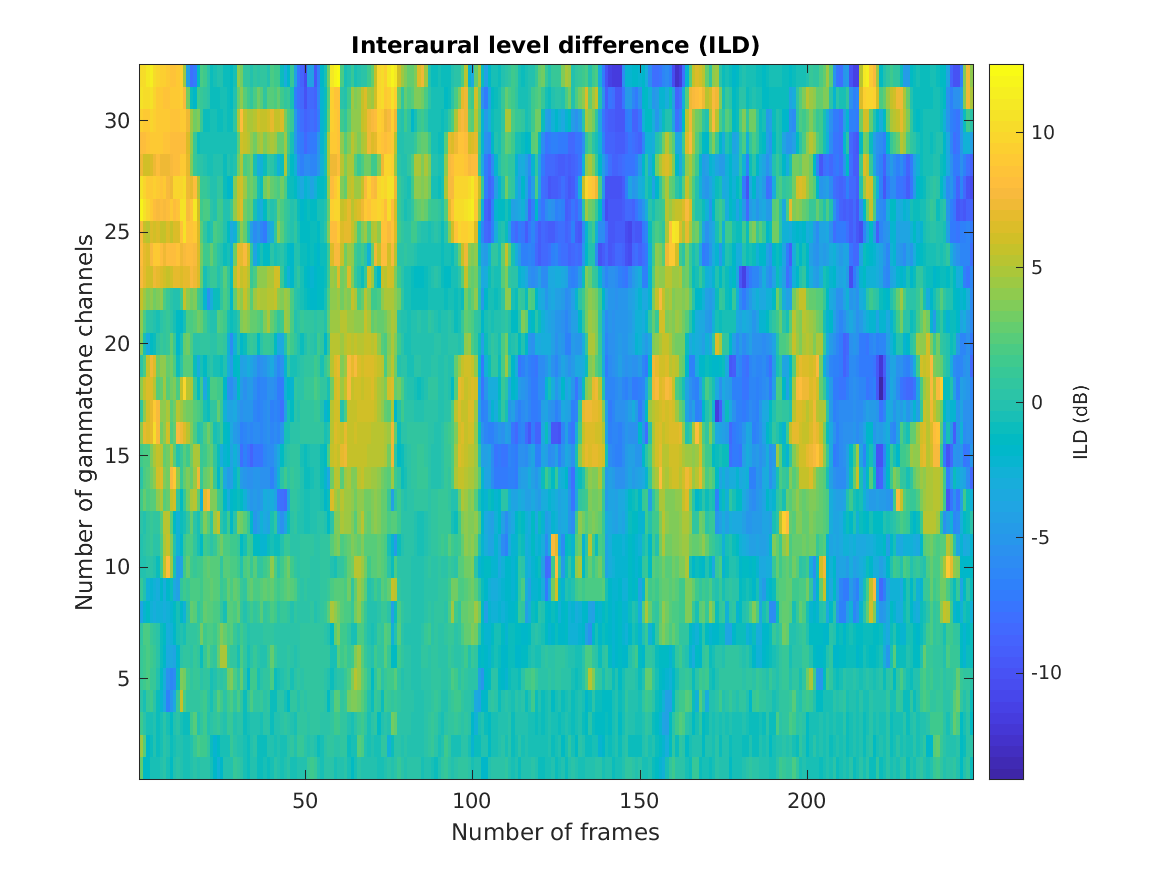

Interaural level differences (ILDs)

This figure shows the ILDs in the time-frequency domain estimated from the mixed signal of three concurrent speakers.
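An ILD is usually expressed as the ratio of the signal energies at the two ears, in dB. The following is a minimal sketch for one frame. It assumes a hypothetical function name, estimate_ild, and the convention that positive values mean the left ear receives more energy.

```python
import numpy as np


def estimate_ild(left, right, eps=np.finfo(float).eps):
    """ILD of one frame in dB; positive when the left channel is louder."""
    energy_left = np.sum(np.asarray(left, dtype=float) ** 2)
    energy_right = np.sum(np.asarray(right, dtype=float) ** 2)
    # eps guards against log(0) and division by zero in silent frames
    return 10.0 * np.log10((energy_left + eps) / (energy_right + eps))
```

Halving the amplitude of the right channel, for example, gives an ILD of about 6.02 dB.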

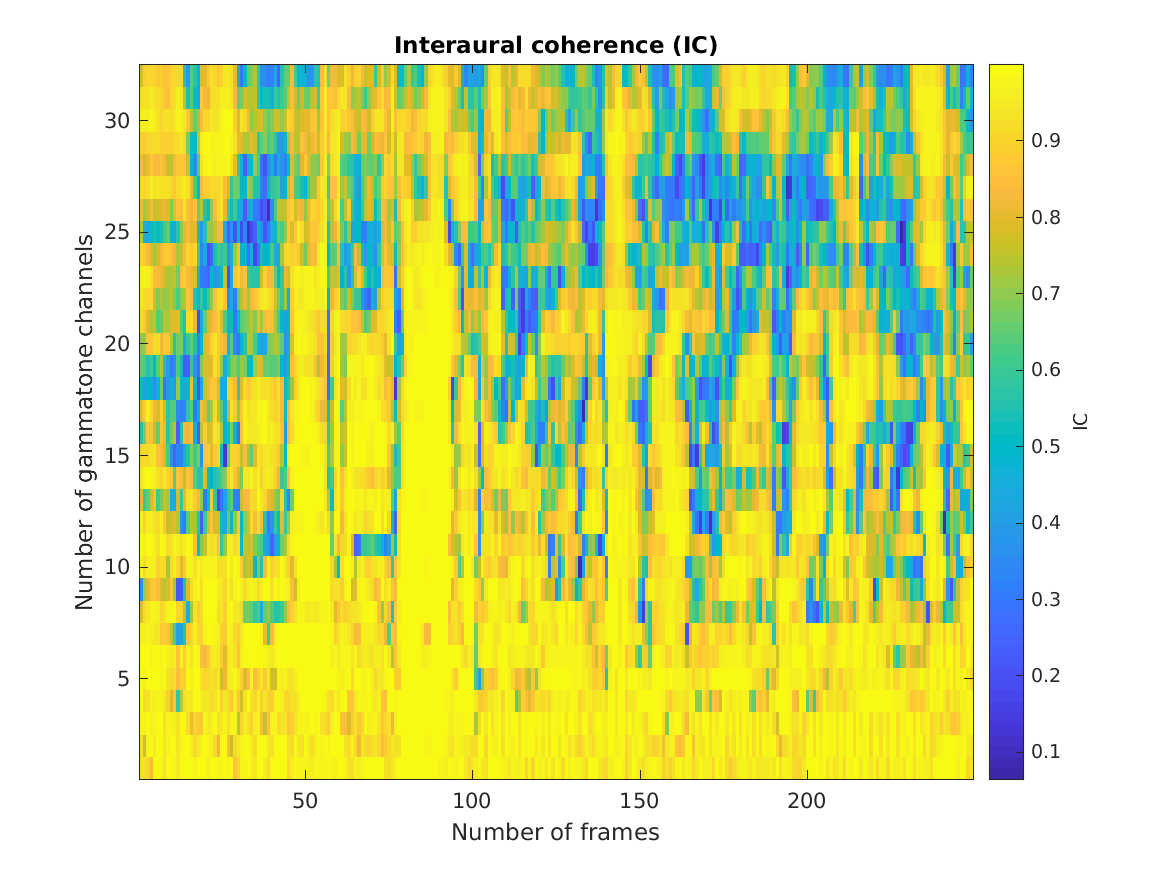

Interaural coherence

This figure shows the interaural coherence in the time-frequency domain estimated from the mixed signal of three concurrent speakers.
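Interaural coherence is commonly defined as the maximum of the normalized interaural cross-correlation within a plausible range of interaural delays. The sketch below computes that quantity for one frame. The function name interaural_coherence and the 1 ms lag limit are assumptions, not values taken from the toolbox.

```python
import numpy as np


def interaural_coherence(left, right, fs, max_itd=1e-3):
    """Maximum normalized cross-correlation of one frame within +/- max_itd."""
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    n = len(left)
    max_lag = int(round(max_itd * fs))
    xcorr = np.correlate(right, left, mode="full")
    lags = np.arange(-(n - 1), n)
    # Normalize by the frame energies so identical signals give 1
    norm = np.sqrt(np.sum(left ** 2) * np.sum(right ** 2))
    if norm == 0:
        return 0.0  # silent frame: treat as incoherent
    return float(np.max(xcorr[np.abs(lags) <= max_lag]) / norm)
```

Identical signals give 1, while two independent noise signals give a much lower value. Units dominated by a single source therefore tend to show high coherence.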

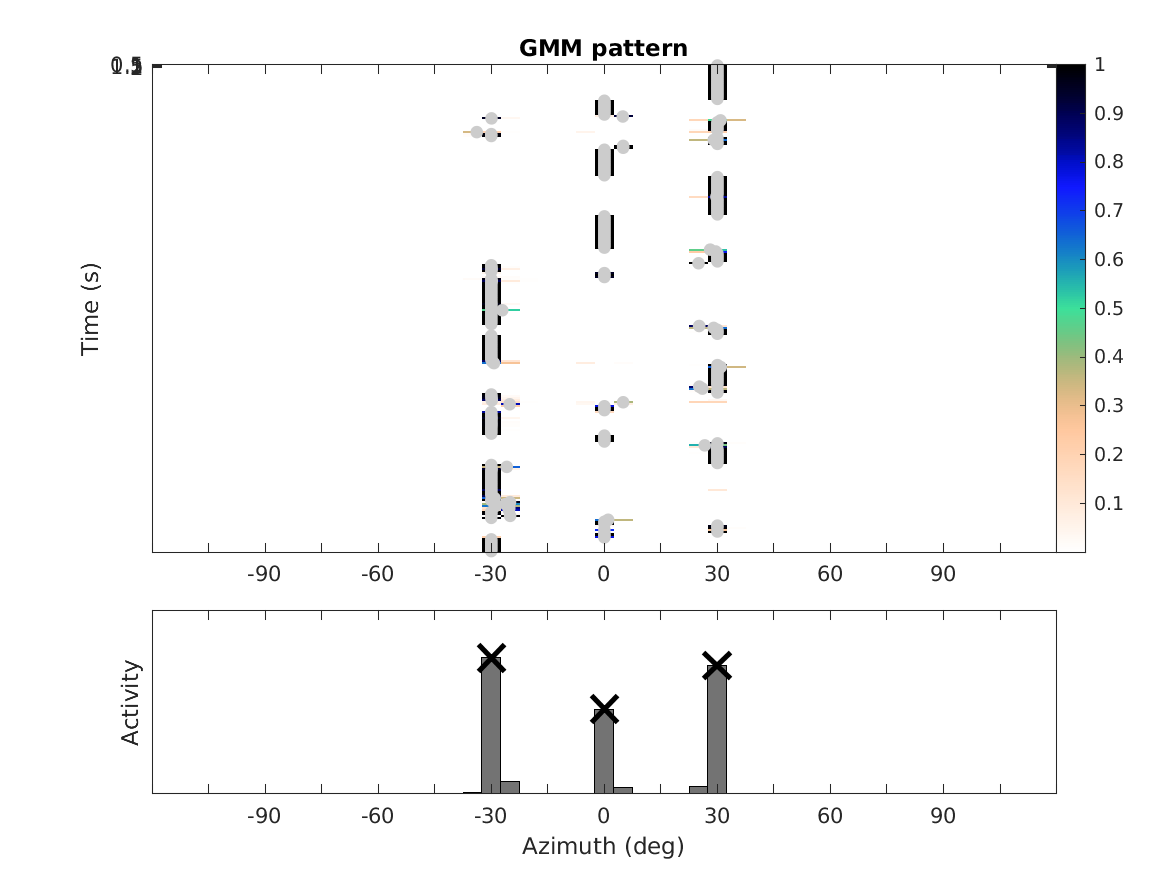

GMM pattern

This figure shows the pattern and the histogram obtained from the GMM estimator for the mixed signal of three concurrent speakers.
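The final azimuth estimates require integrating the per-unit evidence across frequency and time and then selecting the strongest peaks. The code below is a hedged sketch of that step, and the model in the toolbox may build its pattern and histogram differently. The sketch:

1. converts hypothetical per-unit log-likelihoods into posteriors, assuming a flat prior over azimuth,
2. sums the posteriors into an azimuth pattern,
3. returns the n_sources largest local maxima.

The function name pick_source_azimuths and the input layout are illustrative assumptions.

```python
import numpy as np


def pick_source_azimuths(log_lik, azimuths, n_sources):
    """Sum per-unit posteriors over frequency and time and pick the top peaks.

    log_lik: hypothetical (n_azimuths, n_channels, n_frames) log-likelihoods.
    Returns the azimuths of the n_sources highest local maxima, sorted.
    """
    # Per-unit posteriors with a flat prior (softmax over the azimuth axis)
    shifted = log_lik - np.max(log_lik, axis=0, keepdims=True)
    post = np.exp(shifted)
    post /= np.sum(post, axis=0, keepdims=True)
    # Azimuth pattern: accumulate evidence across channels and frames
    pattern = post.sum(axis=(1, 2))
    # Local maxima: above the left neighbour and not below the right one;
    # neighbours outside the azimuth range count as -inf
    padded = np.concatenate(([-np.inf], pattern, [-np.inf]))
    is_peak = (pattern > padded[:-2]) & (pattern >= padded[2:])
    peak_idx = np.flatnonzero(is_peak)
    # Keep the n_sources peaks with the largest pattern values
    strongest = peak_idx[np.argsort(pattern[peak_idx])[::-1][:n_sources]]
    return np.sort(np.asarray(azimuths)[strongest])
```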

This code produces the following output:

The estimated azimuth angles are: -29.8977 29.9242 0.0925926 degrees

References:

T. May, S. van de Par, and A. Kohlrausch. A probabilistic model for robust localization based on a binaural auditory front-end. IEEE Trans. Audio, Speech, Lang. Process., 19:1-13, 2011.